Why Google ‘Thought’ This Black Woman Was a Gorilla

This is a story about an incident that happened to 22-year-old freelance web developer Jacky Alciné, the racist slur that caught him off guard, and the machines behind it.

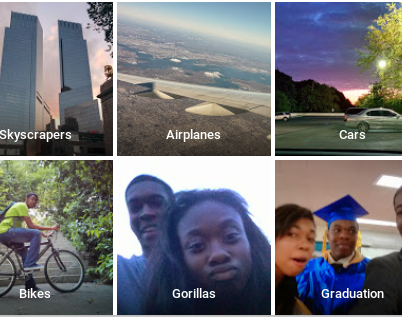

You may have heard about it earlier this summer: On Sunday, June 28, Jacky sat relaxing, half-watching the BET Awards and messing around on his computer. It was just a normal evening, until a selfie from his friend popped up in the Google Photos app. As Jacky scrolled through his photos, he realized that Google had rolled out “photo categorization” – his pictures had automatically been labelled and organized based on what was in them.

His brother’s graduation? No problem, Google’s software totally figured it out. But as he kept scrolling, he came upon a series of photos of himself and a friend at a concert.

But the label didn’t say “people” or “concert.”

The label Jacky saw on all of the pictures he had taken with this particular friend.

(Jacky Alciné/Twitter)

“It says ‘gorilla,’ and I’m like ‘nah,’” Jacky says. “[It’s] a term that’s been used historically to describe black people in general. Like, ‘Oh, you look like an ape,’ or ‘you’ve been classified as a creature,’” he says. “[B]ecause the closer they looked to a chimp… the more black, the more pure the blackness was supposed to be, so they were probably better for cropping, going back to the days of slavery and cattle selling… [and] of all terms, of all derogatory terms to use, that one came up.”

He tweeted at the company and they resolved it within 14 hours.

@jackyalcine Can we have your permission to examine the data in your account in order to figure out how this happened?

— Yonatan Zunger (@yonatanzunger) June 29, 2015

But this isn’t the only example of a machine making a problematically human mistake. Flickr has been fielding complaints for auto-tagging people in photos as “animals,” and concentration camps as “jungle gyms.” According to computer scientist Yoshua Bengio, these stories are just going to keep making headlines.

Because to prevent a machine from drawing an offensive conclusion, you have to teach it how complex society is – and it just may be impossible to code around all of the deeply human social pitfalls. As he tells Manoush Zomorodi on this week’s Note to Self:

Yoshua Bengio: We would have to have people tell the machine why it makes specific mistakes. But you would need not just like two or three examples, you would need thousands or millions of examples for the machine to catch all of the different types of errors that we think exist.

Manoush Zomorodi: So should companies like Google be even using deep learning like this if there is the possibility that these really offensive mistakes can happen?

Bengio: Well, that’s a choice that they have to make. The system can make mistakes and you have to deal with the fact that there will be mistakes.

Google Photos uses a type of artificial intelligence called “machine learning.” Scientists give the machine millions of examples and teach it to start recognizing objects or words. Then, through an even more specific approach called “deep learning,” they train machines to start seeing patterns in the data, to draw their own conclusions, and to, in a way, think for themselves. This enables the machines to process huge reams of previously unmanageable data.
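For readers who want to see the "learn from examples" idea in miniature, here is a toy sketch in Python. It is not how Google's software works: it is a simple nearest-centroid classifier, not deep learning, and the two-number "features" and the labels are made up for illustration. What it does show is that the program can only ever answer with a label it was trained on, and it has no idea which wrong answers would be offensive.

```python
from collections import defaultdict
from math import dist


def train(examples):
    """Average the feature vectors seen for each label (one centroid per label)."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the new example."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical two-number "features" standing in for real image data.
examples = [
    ((0.9, 0.1), "graduation"),
    ((0.8, 0.2), "graduation"),
    ((0.1, 0.9), "concert"),
    ((0.2, 0.8), "concert"),
]
centroids = train(examples)

# A clear-cut photo and an ambiguous one: both get a confident-looking label.
print(predict(centroids, (0.85, 0.15)))
print(predict(centroids, (0.5, 0.45)))
```

Even the ambiguous second photo gets one of the two known labels. Nothing in the arithmetic tells the program when it is out of its depth, which is why, as Bengio says, people have to supply many more examples of its mistakes.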

In many ways, it’s one of the most exciting advances in artificial intelligence to date. On this week’s show, however, we’re taking a closer look at how AI could get it so wrong in practice, and why mistakes like this one matter for big tech companies and those of us who use their products.

Manoush and Jacky Alciné take a Note to Self(ie).

(Manoush Zomorodi/Note to Self)
